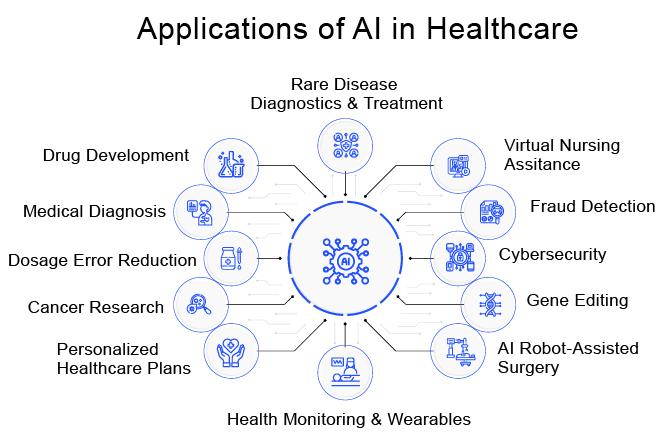

In an era where artificial intelligence (AI) is making significant strides across various sectors, healthcare remains at the forefront of this technological revolution. During a recent discussion on AI and healthcare, Dr. Nigam Shah, a leading figure in biomedical data science at Stanford, emphasized the critical juncture at which the industry currently stands: while the potential of AI to enhance healthcare is immense, there is a pressing concern regarding the sustainability of its growth pipeline. Despite decades of research and advancements, Dr. Shah pointed out that the methodologies and frameworks established in academic settings are not adequately equipped to scale impactful AI solutions into practical, widespread applications. As he articulates, we must navigate the complex landscape of AI advancement, recognizing that the journey from initial development to large-scale implementation demands a reevaluation of existing strategies and resources. The conversation, hosted by Mika Newton, delves into these vital issues, raising questions about the future of AI in healthcare and its ability to genuinely augment human capabilities rather than merely replicate them.

The Evolution of AI in Healthcare and Its Historical Context

The trajectory of artificial intelligence in the medical field reflects a rich history of innovation shaped by societal needs and technological advancements. From the early days of rule-based expert systems in the 1970s, AI in healthcare has transitioned through various phases, gradually evolving into complex machine learning algorithms capable of analyzing vast datasets. Today, algorithms can predict patient outcomes and optimize treatment plans, showcasing how computational methods have been integrated into clinical workflows. The journey has not been without challenges; historical skepticism towards AI’s reliability, data privacy concerns, and the need for rigorous validation have all influenced its adoption. As Dr. Nigam Shah notes, these historical contexts illustrate the careful balance between innovation and ethical considerations in deploying AI solutions within healthcare settings.

The significance of interdisciplinary collaboration cannot be overstated in the evolution of AI technology for healthcare applications. Strengthening partnerships among data scientists, healthcare professionals, and policy makers is essential to develop robust frameworks that facilitate safer and more effective integration of AI tools. The future demand for AI-driven solutions necessitates a commitment to continual education, not just for developers but also for clinicians, who must be equipped to interpret and utilize AI-generated insights effectively. As emphasized by Dr. Shah, fostering an environment that encourages experimentation and adaptability will be pivotal in overcoming existing barriers, ensuring that AI becomes a transformative force in enhancing patient care rather than merely a theoretical concept.

Challenges in Developing Sustainable AI Solutions for Medical Applications

One of the most pressing issues in harnessing AI for healthcare is the proliferation of data biases that can skew results and exacerbate health disparities. These biases often originate from unrepresentative datasets, which can lead to algorithms that perform poorly for marginalized populations. Furthermore, data collection practices frequently prioritize certain demographic groups over others, thereby neglecting the diverse needs of the entire population. To address these concerns, stakeholders must focus on rigorous data audits and continuously enhance the diversity of datasets employed in training models to ensure equitable healthcare solutions. This approach not only safeguards against inequities but also fosters trust within communities, which is essential for successful AI integration in clinical settings.
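The data audit described above can be made concrete with a small sketch. It is an illustration under stated assumptions, not a method taken from the discussion: it assumes each record carries a demographic group label, a binary true outcome, and a binary model prediction, and it reports each group's share of the dataset alongside the model's sensitivity for that group. The function name `audit_by_group`, the `min_share` cutoff, and the record layout are all hypothetical.

```python
from collections import defaultdict


def audit_by_group(records, min_share=0.25):
    """Summarize representation and sensitivity per demographic group.

    records: iterable of (group, y_true, y_pred) tuples with binary labels.
    min_share: hypothetical cutoff below which a group is flagged as
               underrepresented; choose it to suit the population served.
    """
    counts = defaultdict(lambda: {"n": 0, "tp": 0, "fn": 0})
    total = 0
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        total += 1
        if y_true == 1:
            # Only true positives and false negatives matter for sensitivity.
            if y_pred == 1:
                c["tp"] += 1
            else:
                c["fn"] += 1

    report = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        share = c["n"] / total
        report[group] = {
            "share": share,
            # Sensitivity is undefined when a group has no positive cases.
            "sensitivity": c["tp"] / positives if positives else None,
            "underrepresented": share < min_share,
        }
    return report
```

A group flagged as underrepresented, or one whose sensitivity trails the others, is a prompt for closer review rather than proof of bias, since small groups produce noisy estimates.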

Regulatory challenges also pose significant hurdles to the development of sustainable AI applications in medicine. Navigating complex compliance requirements while ensuring that innovations keep pace with rapid technological advancements can be daunting for developers. Additionally, the inherent unpredictability of AI systems complicates the establishment of clear-cut guidelines for approval and monitoring. A collaborative effort between AI technologists and regulatory bodies is vital to streamline the approval process without stifling innovation. By working together to create adaptable and forward-thinking regulations, the healthcare sector can better support the integration of AI technologies, balancing the need for safety and efficacy with the urgency for innovative solutions.

Bridging the Gap: From Research to Real-World Implementation

To ensure that AI technologies transition smoothly from theoretical frameworks to practical applications in healthcare, a thorough understanding of real-world constraints is necessary. This entails not only accounting for the technical prowess of AI models but also recognizing the cultural and operational nuances of healthcare environments. As Dr. Shah has highlighted, dedicated teams must engage with clinicians on the ground to align AI functionalities with daily medical practices. An effective strategy includes:

- Iterative Design: Continuously refining AI tools based on user feedback and clinical outcomes.

- Integration Testing: Assessing how AI can coalesce with existing healthcare infrastructure without causing disruption.

- Stakeholder Engagement: Actively involving all relevant parties in discussions about the potential impacts and benefits of AI applications.

The path to successful implementation rests on creating multidisciplinary teams that blend technical knowledge with healthcare expertise. By fostering dialogue among stakeholders, including data scientists, healthcare providers, patients, and regulatory agencies, there is a greater opportunity to address challenges collectively. Furthermore, as AI solutions inch closer to direct patient interaction, developing frameworks that prioritize clarity and accountability will be crucial. This holistic approach not only elevates the standard of patient care but also instills a sense of confidence in AI-driven innovations within the healthcare sector.

Evaluating AI Utility: Moving Beyond Traditional Validation Methods

One critical aspect to consider in assessing the effectiveness of AI in healthcare is the need for innovative evaluation frameworks that go beyond conventional validation techniques. Traditional methods often focus on statistical accuracy and performance metrics; however, these metrics may not adequately reflect the real-world utility of AI systems. For instance, the incorporation of user-centered design principles into AI evaluation can reveal how well these systems integrate into healthcare workflows. This approach requires actively engaging end-users, including physicians and patients, in the validation process to gather insights on usability, efficiency, and overall satisfaction. Moreover, leveraging large-scale, longitudinal studies can provide richer data on the impact of AI technologies on patient outcomes, which can guide ongoing refinements to these systems.
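To illustrate why accuracy alone can mislead, the sketch below compares accuracy with net benefit, a measure from decision curve analysis that weighs true positives against false positives at a chosen risk threshold. Net benefit is brought in here purely as an example of a utility-oriented metric; the discussion does not name a specific one. The function names and the toy data are hypothetical.

```python
def accuracy(y_true, y_score, threshold):
    """Fraction of patients classified correctly at the given threshold."""
    correct = sum(
        1 for y, s in zip(y_true, y_score) if (s >= threshold) == (y == 1)
    )
    return correct / len(y_true)


def net_benefit(y_true, y_score, threshold):
    """Net benefit of acting on every patient whose score meets the threshold.

    Computed as TP/N - FP/N * (threshold / (1 - threshold)), so each false
    positive is penalized by the odds implied by the risk threshold.
    """
    if not 0 < threshold < 1:
        raise ValueError("threshold must be strictly between 0 and 1")
    n = len(y_true)
    tp = sum(1 for y, s in zip(y_true, y_score) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(y_true, y_score) if s >= threshold and y == 0)
    weight = threshold / (1 - threshold)
    return tp / n - weight * fp / n


# Toy data (hypothetical): 2 of 10 patients have the condition.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_score = [0.9, 0.4, 0.6, 0.1, 0.2, 0.1, 0.3, 0.2, 0.1, 0.2]

print(accuracy(y_true, y_score, 0.5))     # 0.8
print(net_benefit(y_true, y_score, 0.5))  # 0.0
```

In this toy case the model is right for eight of ten patients, yet acting on its flags yields no net benefit, because it catches one true case at the cost of one false alarm and misses the other true case entirely. A fuller evaluation would also account for workflow fit and clinician capacity, which no single number captures.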

AI’s potential in healthcare further underscores the importance of establishing metrics that encompass ethical considerations, especially concerning transparency and fairness. Stakeholders should work towards developing a set of evaluation criteria that incorporate social equity factors, ensuring that AI solutions benefit all patient populations equally. This expanded evaluative lens could entail assessing algorithms for bias mitigation, interpretability, and stakeholder trust. By aligning AI validation processes with broader healthcare goals, innovators can not only demonstrate the tangible value of AI tools but also navigate the ethical implications of their deployment. Such proactive strategies will ultimately facilitate the responsible integration of AI technologies, fostering an ecosystem where both practitioners and patients can thrive.