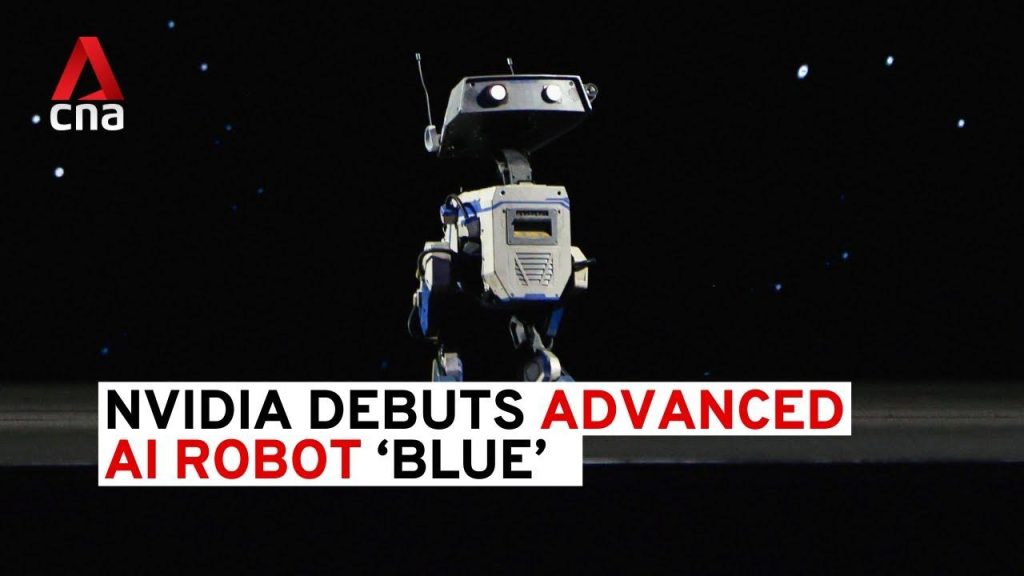

At its GTC 2025 keynote, Nvidia announced a collaboration with Google DeepMind and Disney Research on “Newton,” an open-source physics engine for robotics simulation. The partnership aims to advance robotics and artificial intelligence by bringing accurate physics into real-time simulation, with the goal of improving robot training and fine-grained tactile interaction. The keynote also featured “Blue,” a small Disney Research robot that appeared on stage to demonstrate the technology. The announcements reach beyond simulation. Nvidia has also teamed up with General Motors to develop AI infrastructure for self-driving vehicles, a notable step for autonomous transportation. Together with Nvidia’s newly launched Dynamo, open-source software for serving AI inference workloads at scale, these efforts target AI-driven manufacturing, enterprise solutions, and intelligent automotive systems.

Next-Generation Robotics Through Collaborative Innovation

Collaborative innovation is expanding what robots can do, particularly in machine learning applications. Integrating advanced AI capabilities into robots’ core functions lets them operate with greater efficiency and adaptability. Key elements contributing to this innovation include:

- The development of real-time decision-making algorithms that enhance robotic responses to dynamic environments.

- Interconnected systems designed to facilitate seamless communication between robots and human operators.

- Advanced simulation platforms that provide realistic training scenarios, improving the robots’ learning curves.

Furthermore, companies like Nvidia are building next-generation chips that drive these intelligent systems. Such advancements enable robots to take on complex tasks across sectors, from manufacturing to healthcare, using deep learning techniques. The benefits extend beyond industrial applications to consumer robotics, where personal assistant bots can learn through interactions and become more useful over time.

Transforming Autonomous Vehicles with Advanced AI Infrastructure

The integration of cutting-edge AI technologies is reshaping the landscape of autonomous vehicles, enabling sophisticated functionality that was previously out of reach. At the core of these advancements lies a suite of tools and architectures designed to enhance both the operational capabilities and the safety of self-driving systems. Key aspects of this transformation include:

- Enhanced sensor fusion that integrates data from multiple sources to create a complete view of the vehicle’s surroundings.

- Machine learning models that continuously adapt to new driving scenarios, significantly improving decision-making processes.

- Edge computing solutions that provide real-time analytics, ensuring that critical data is processed where it’s generated and reducing latency.
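The article does not say how sensor fusion is implemented in any particular vehicle. As a rough illustration of the idea, the sketch below combines two noisy distance readings using inverse-variance weighting, a standard textbook technique. The `Measurement` type, the `fuse` function, and the lidar and radar numbers are all hypothetical and chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # estimated distance to an obstacle, in metres
    variance: float  # sensor noise variance, in metres squared


def fuse(measurements):
    """Combine independent readings with inverse-variance weighting.

    More precise sensors (smaller variance) get larger weights, and the
    fused variance is never larger than that of the best single sensor.
    """
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return Measurement(value=value, variance=1.0 / total)


# Hypothetical readings: a precise lidar and a noisier radar.
lidar = Measurement(value=10.0, variance=0.04)
radar = Measurement(value=10.6, variance=0.36)
fused = fuse([lidar, radar])
print(f"fused distance: {fused.value:.2f} m, variance: {fused.variance:.3f}")
```

The fused estimate sits much closer to the lidar reading because the lidar is assumed to be nine times less noisy. Production systems typically rely on more elaborate filters, but the weighting principle is similar.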

As the automotive industry ventures deeper into AI integration, Nvidia’s latest chips exemplify the power of this technology, processing vast amounts of data at high speed. These innovations can drive improvements in features such as route optimization, pedestrian detection, and vehicle-to-vehicle communication. The potential for such systems extends beyond enhanced safety and efficiency; it sets the stage for a future where smart vehicles can learn and evolve autonomously, interacting with their environments in ways that resemble human intuition.

Unleashing AI Capabilities in Manufacturing and Enterprise

The recent unveiling of an AI-powered robotic solution at GTC 2025 highlights a paradigm shift in manufacturing capabilities and enterprise operations. Leveraging the potency of next-generation chips developed by Nvidia, these robots are crafted to carry out intricate tasks with unparalleled precision. This transformation is driven by several foundational innovations, including:

- Adaptive learning systems that allow robots to improve their performance based on real-time data and experience.

- Robust safety protocols that ensure human workers can collaborate with robots in shared spaces securely.

- Advanced object recognition that equips robots with enhanced environmental awareness, facilitating smoother operations.

The implications of these advancements in robotics extend far beyond mere automation; they redefine operational efficiency across various sectors. Enterprises that harness the capabilities of AI-driven robots are poised to see significant boosts in productivity, optimizing processes from assembly lines to logistics. By integrating machine vision and decision-making algorithms, companies can expect a future where robots not only perform repetitive tasks but also participate actively in complex problem-solving and adaptive workflows.

The Revolution of Real-Time Simulation in AI Development

The integration of real-time simulation technologies is reshaping AI development by enabling faster iterations and testing of robotic systems. This advancement allows engineers and developers to create and refine machine learning models in dynamic virtual environments that closely mimic real-world scenarios. Key components driving this innovation include:

- Instantaneous feedback loops that provide data on robotic performance during simulations, leading to rapid adjustments and improvements.

- Scalable simulation environments that can accommodate various scenarios, from simple tasks to complex interactions with human agents.

- Multi-agent simulations that allow for testing of robots in collaboration with one another, enhancing cooperative behaviors and overall efficiency.
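To make the feedback-loop idea concrete, here is a minimal sketch that is not tied to Newton or any other real simulator. It steps a toy one-dimensional point mass under a PD controller, scores each candidate gain by its final position error, and keeps the best one. The function names, gains, and time step are all assumptions made for illustration.

```python
def simulate(kp, kd=2.0, target=1.0, dt=0.01, steps=500):
    """Simulate a unit point mass driven toward `target` by a PD controller.

    Returns the absolute position error after the final step, which serves
    as the performance signal fed back to the tuning loop.
    """
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        force = kp * (target - pos) - kd * vel
        vel += force * dt  # semi-implicit Euler: update velocity first
        pos += vel * dt    # then advance position with the new velocity
    return abs(target - pos)


def tune(candidates):
    """Run one simulated episode per gain and return the best gain and all scores."""
    scores = {kp: simulate(kp) for kp in candidates}
    best = min(scores, key=scores.get)
    return best, scores


best_kp, scores = tune([0.0, 0.25, 4.0])
print("best proportional gain:", best_kp)
```

Because each episode runs in a fraction of a second, a developer can compare many controller settings in simulation before touching physical hardware, which is the kind of rapid iteration the list above describes.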

With these real-time simulations, developers can overcome traditional limitations in AI training processes. Notably, the ability to visualize the effects of design choices and algorithms in real time gives a clearer picture of likely outcomes. This reduces the risks associated with deploying AI systems in critical applications like healthcare and autonomous vehicles, helping ensure that they are not only capable but also reliable and safe in their operational contexts.