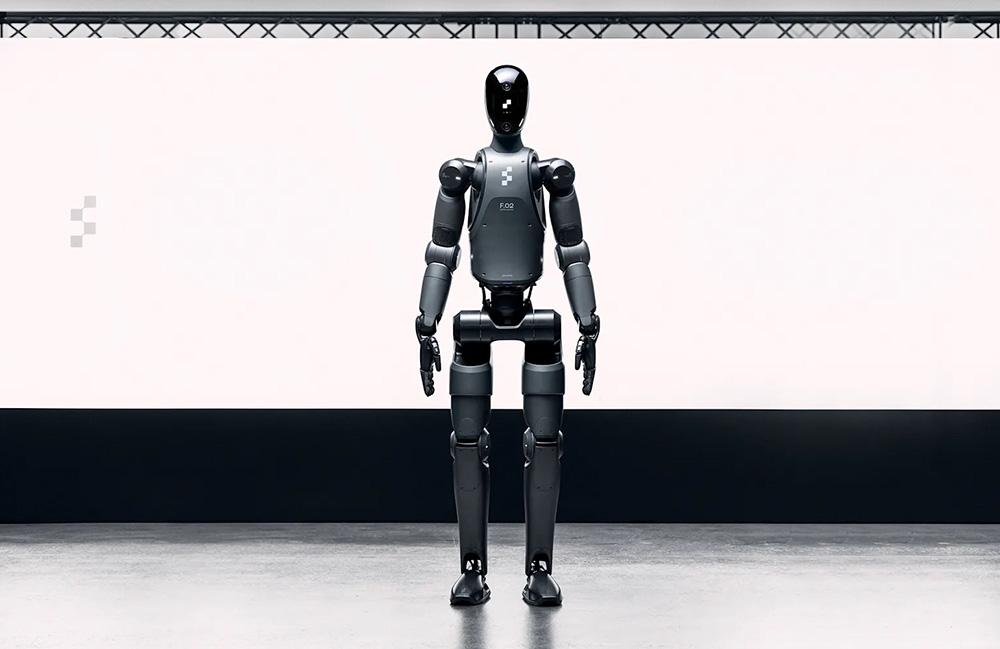

In a notable advance for robotic vision, the Helix S1 has been unveiled with a stereo vision system that sets it apart from its monocular predecessor. Using two integrated cameras, the Helix S1 pairs multiscale feature extraction networks with a cross-attention transformer to improve depth perception and environmental understanding. This approach promises better performance in applications ranging from autonomous navigation to advanced surveillance, marking a considerable leap in the capabilities of robotic systems. As the industry anticipates the implications of this technology, experts are keen to explore its potential for the future of machine vision.

Understanding Stereo Vision Technology in Helix S1

The Helix S1’s stereo vision technology is anchored in its dual-camera configuration, which lets the system capture each scene from two viewpoints. Using algorithms tailored for 3D mapping and object recognition, it renders fine details of the surrounding environment. This capability is vital for applications requiring precise spatial awareness, notably in dynamic settings such as automotive manufacturing. With its enhanced pixel accuracy and depth resolution, the Helix S1 can distinguish between objects and obstacles, supporting safety and efficiency in operational workflows.

Moreover, the integration of deep learning techniques allows the Helix S1 to continually improve its performance through real-time data analysis. As it processes inputs from both cameras, the system adjusts dynamically to varying light conditions and obstacles, which makes it more adaptable. The potential applications extend beyond traditional robotic functions; the system could have a considerable impact on sectors like logistics, where precise navigation and obstacle detection are paramount. By redefining how machines interpret their surroundings, the Helix S1 stands at the forefront of vision-based automation technologies.
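To make the link between a dual-camera setup and depth perception concrete, the sketch below applies the standard pinhole relation for a rectified stereo pair, depth = focal length × baseline / disparity. It is only an illustration of the general principle, not the Helix S1's actual pipeline; the function name `disparity_to_depth` and the focal length, baseline, and disparity values are hypothetical.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Convert a disparity map (pixels) to depth (metres) for a rectified stereo pair."""
    disparity = np.asarray(disparity_px, dtype=np.float64)
    # Pixels with no valid match (disparity <= 0) are treated as infinitely far away.
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_length_px * baseline_m / disparity[valid]
    return depth

# Hypothetical values chosen only for illustration.
disparity = np.array([[64.0, 32.0],
                      [16.0, 0.0]])
depth = disparity_to_depth(disparity, focal_length_px=800.0, baseline_m=0.12)
print(depth)
```

Larger disparities map to nearer points, so halving the disparity doubles the estimated depth. This is also why depth resolution degrades with distance in any stereo system.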

The Role of Multiscale Feature Extraction Networks

The advanced capabilities of the Helix S1 stem from its multiscale feature extraction networks, which analyze multiple levels of detail within an image simultaneously. This architecture allows the system to recognize and interpret features at resolutions ranging from coarse to fine. By employing a hierarchical approach, it enhances object recognition accuracy and provides a deeper contextual understanding of the environment. Key benefits of this technology include:

- Enhanced Precision: The network’s ability to detect and interpret minute details significantly improves the system’s situational awareness.

- Dynamic Adaptability: The multiscale extraction process ensures that the Helix S1 can robustly respond to various environmental conditions, optimizing performance on the fly.

- Improved Efficiency: By rapidly processing and analyzing multiple feature scales, the network reduces latency and accelerates decision-making in critical scenarios.

This processing capability is crucial for applications that demand real-time insights, such as assembly line support and quality control in automotive manufacturing. The multiscale networks in the Helix S1 allow it not only to identify parts and components with high accuracy but also to monitor production workflows efficiently. The technology also marks a substantial advance in artificial intelligence, opening new avenues for integrating AI systems into traditional manufacturing environments and for improving productivity and safety standards.
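The coarse-to-fine idea behind multiscale processing can be sketched with a simple image pyramid. The example below uses fixed 2x2 average pooling as a stand-in for learned feature extraction, so it shows only how the same image is represented at several resolutions; it is not the network used in the Helix S1, and the helper names `downsample_2x` and `build_pyramid` are hypothetical.

```python
import numpy as np

def downsample_2x(image):
    """Halve the resolution by averaging non-overlapping 2x2 blocks."""
    h, w = image.shape
    h2, w2 = h - h % 2, w - w % 2          # crop an odd trailing row/column if present
    cropped = image[:h2, :w2]
    return cropped.reshape(h2 // 2, 2, w2 // 2, 2).mean(axis=(1, 3))

def build_pyramid(image, levels):
    """Return a list of images from finest (original) to coarsest."""
    pyramid = [np.asarray(image, dtype=np.float64)]
    for _ in range(levels - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid

# A small synthetic grayscale image, used only for illustration.
image = np.arange(64, dtype=np.float64).reshape(8, 8)
pyramid = build_pyramid(image, levels=3)
shapes = [level.shape for level in pyramid]
print(shapes)
```

In a learned multiscale network, each level would typically be produced by convolutional layers rather than plain averaging, and features from the coarse levels would be combined with the fine ones to provide context.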

Enhancing Visual Processing with Cross Attention Transformers

The Helix S1’s architecture incorporates cross-attention transformers that refine how visual data is processed. By focusing on relevant features across different frames, the system builds a more cohesive understanding of its surroundings. This mechanism not only enhances object detection but also improves the model’s ability to track movement and changes within a scene, which is particularly useful in environments like automotive manufacturing where precision is critical. Attention mechanisms also allow computational resources to be allocated dynamically, concentrating effort on the most informative parts of the visual input and optimizing performance without compromising speed.

Such advancements pave the way for integrating advanced AI applications in various sectors beyond manufacturing. With the ability to analyze and synthesize data effectively, the Helix S1 can be employed in diverse scenarios, including smart logistics, security, and autonomous vehicles. This versatility stems from its adeptness at cross-referencing multiple data points, enabling it to refine its operational strategies on the go. By fostering an environment where machines can learn and adapt in real-time, the system represents a significant milestone in enriching visual processing capabilities, setting new benchmarks for responsiveness and accuracy in robotic systems.
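The cross-attention mechanism described in this section can be illustrated with a minimal scaled dot-product sketch, in which feature tokens from one view query the tokens of another. This assumes, purely for illustration, that the two views are the left and right camera images; the learned query, key, and value projections of a real transformer are omitted, the token arrays are random placeholders, and none of the names come from the Helix S1 itself.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def cross_attention(queries, keys, values):
    """Scaled dot-product attention where queries and keys/values come from different sources."""
    dim = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(dim)   # similarity of each query to each key
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ values, weights

# Random placeholder features: 4 tokens from one view, 6 from the other, 8 channels each.
rng = np.random.default_rng(0)
left_tokens = rng.normal(size=(4, 8))
right_tokens = rng.normal(size=(6, 8))

attended, weights = cross_attention(left_tokens, right_tokens, right_tokens)
print(attended.shape, weights.shape)
```

Each row of `weights` shows how strongly one left-view token attends to every right-view token, which is the sense in which attention concentrates computation on the most informative parts of the input.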

Implications for Future Applications in Visual Systems

The integration of the Helix S1 AI system in production facilities like BMW’s heralds a new era in visual system applications, significantly impacting fields such as logistics and automotive safety. By harnessing the power of its sophisticated visual processing capabilities, organizations can benefit from:

- Automated Quality Assurance: Enhanced object recognition allows for real-time quality checks, minimizing human error during manufacturing.

- Streamlined Operational Efficiency: The system’s ability to navigate complex environments seamlessly simplifies workflows, reducing downtime caused by misalignment or errors.

- Adaptable Robotics: With the Helix S1’s advanced learning algorithms, robots can continually adjust to new challenges, making them suitable for various production lines without extensive reprogramming.

Moreover, the implications extend beyond manufacturing efficiency; they encourage a shift towards more intelligent and responsive systems across industries. As AI and vision technology converge, future applications may include:

- Autonomous Delivery Drones: By integrating this technology, drones could navigate and deliver packages with newfound precision, even in challenging environments.

- Smart Home Automation: Enhanced visual perception can redefine home security systems, allowing for more accurate detection of movements and activities.

- Urban Infrastructure Monitoring: Cities could employ these AI systems for real-time monitoring of critical infrastructure, identifying potential risks proactively.