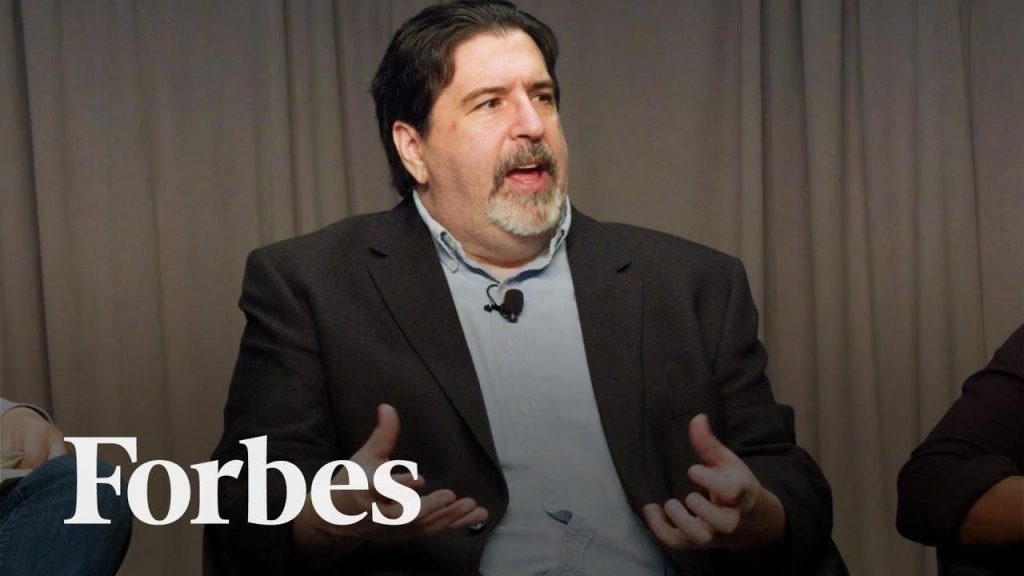

In a world where artificial intelligence is rapidly evolving, the need for effective governance and dialogue among AI communities has never been more pressing. Michael DEA, director of the Oslo for AI project, recently addressed a panel of experts, highlighting critical gaps in our understanding of intelligence and in the regulation of AI systems. With only a handful of attendees expressing confidence in current governance models, DEA urged participants to engage in deeper conversations about the potential risks associated with AI. “If we were going to be doing really careful thoughtful governance work, we would have better answers to all of those questions,” he noted. The panel, featuring insights from former CIA political analyst Dennis Gieon and technology leaders from companies such as Latimer, aimed to unpack these complexities and explore nontraditional approaches to AI governance, setting the stage for a much-needed conversation about the future of artificial intelligence and its oversight.

Exploring the Current State of AI Governance

As organizations grapple with the implications of AI bias, industry insiders emphasize the importance of multi-stakeholder collaboration in shaping effective governance frameworks. Experts from a range of sectors argued that governance must extend beyond mere compliance toward cultivating a culture of accountability within AI development teams. This approach can entail:

- Establishing clear ethical guidelines that are regularly updated

- Creating cross-functional teams that include ethicists, technologists, and community representatives

- Leveraging data audit techniques to identify and mitigate biases proactively
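As one illustration of a data audit technique, the sketch below compares positive-outcome rates across groups in a dataset and flags any group whose rate falls below a chosen fraction of the best-served group's rate (a disparate impact ratio). The 0.8 default mirrors the "four-fifths rule" from US employment-selection guidance. The record layout and the function name `audit_selection_rates` are assumptions made for illustration, not a method described by the panelists.

```python
from collections import defaultdict


def audit_selection_rates(records, group_key="group", outcome_key="selected", threshold=0.8):
    """Hypothetical audit helper.

    Computes the positive-outcome rate for each group and flags groups whose rate
    is below `threshold` times the highest group's rate. Returns (rates, flagged),
    where `flagged` maps each flagged group to its rounded ratio.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1

    rates = {g: positives[g] / totals[g] for g in totals}
    best = max(rates.values())

    flagged = {}
    for group, rate in rates.items():
        # If no group has any positive outcomes, treat every ratio as parity.
        ratio = rate / best if best > 0 else 1.0
        if ratio < threshold:
            flagged[group] = round(ratio, 3)
    return rates, flagged


# Usage with a small synthetic dataset (illustrative values only).
data = (
    [{"group": "A", "selected": True}] * 6
    + [{"group": "A", "selected": False}] * 4
    + [{"group": "B", "selected": True}] * 3
    + [{"group": "B", "selected": False}] * 7
)
rates, flagged = audit_selection_rates(data)
print(rates)    # {'A': 0.6, 'B': 0.3}
print(flagged)  # {'B': 0.5}
```

A flagged group is a prompt for closer review of how the data was collected and labeled, not proof of bias on its own; small groups in particular can produce noisy rates.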

Moreover, panelists pointed out that transparency is crucial for public trust in AI systems. By openly sharing their methodologies and the decision-making processes behind their AI algorithms, organizations can reassure users and stakeholders. As highlighted in the discussions, fostering an environment where feedback is actively sought can aid innovation while strengthening governance mechanisms. Consistent ethics training and awareness sessions for developers could also pave the way for more ethically aligned AI solutions, ultimately promoting better societal outcomes.

Identifying Challenges in AI Understanding and Consensus

One of the predominant obstacles to fully grasping AI bias is uneven knowledge about how data is sourced and what its intrinsic limitations are. Industry specialists argue that biases often originate not only from the algorithms but also from the datasets used to train AI systems. These datasets can exhibit a variety of issues, including historical inaccuracies, insufficient diversity, and outdated information. Stakeholder discussions emphasized the need for a multi-dimensional evaluation of input data to identify these shortcomings. By fostering collaboration between technologists and sociologists, organizations may uncover hidden biases that affect decision-making processes and develop strategies to address them.

Additionally, the difficulty of reaching consensus among AI practitioners complicates collaborative efforts to combat bias. Many experts highlighted the lack of unified terminology and frameworks, which creates dissonance in discussions about ethical standards. Without a common language, initiatives aimed at creating standards for responsible AI development become fragmented. To overcome this challenge, industry leaders stress the importance of establishing comprehensive platforms for sharing best practices and guidelines among organizations. Such platforms could facilitate a more cohesive approach to understanding AI's implications and drive a collective effort toward more equitable AI solutions across sectors.

Innovative Approaches to Enhance AI Regulatory Frameworks

To bolster AI governance, experts recommend implementing dynamic oversight mechanisms that can adapt to the evolving nature of AI technologies. This includes integrating monitoring systems capable of analyzing algorithmic outputs in real time to detect bias as it occurs. By harnessing techniques such as machine learning for anomaly detection, organizations can develop responsive strategies that mitigate bias before it is amplified. Engaging diverse stakeholders in the oversight process not only strengthens governance but also makes frameworks more adaptable to different contexts and local sensitivities.
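The panel did not describe a specific monitoring system. As a minimal sketch, assuming a stream of model decisions each tagged with a group attribute, the monitor below keeps a sliding window of recent decisions and raises an alert when the gap between the highest and lowest per-group positive rate exceeds a threshold. Note that this swaps in a simple fixed-threshold rule rather than a learned anomaly detector; the class name `DisparityMonitor` and its parameters (`window`, `max_gap`, `min_count`) are hypothetical.

```python
from collections import defaultdict, deque


class DisparityMonitor:
    """Hypothetical sliding-window bias monitor (fixed threshold, not ML-based).

    Keeps the most recent `window` (group, outcome) pairs and reports the gap
    between the highest and lowest per-group positive rate when it exceeds
    `max_gap`. Groups with fewer than `min_count` samples in the window are
    ignored so that sparse groups do not trigger noisy alerts.
    """

    def __init__(self, window=500, max_gap=0.2, min_count=30):
        self.events = deque(maxlen=window)
        self.counts = defaultdict(int)  # decisions per group in the window
        self.hits = defaultdict(int)    # positive decisions per group in the window
        self.max_gap = max_gap
        self.min_count = min_count

    def observe(self, group, positive):
        """Record one decision; return the current gap if it breaches max_gap, else None."""
        positive = bool(positive)
        # When the window is full, append() will evict the oldest event,
        # so remove its contribution from the running totals first.
        if len(self.events) == self.events.maxlen:
            old_group, old_positive = self.events[0]
            self.counts[old_group] -= 1
            self.hits[old_group] -= int(old_positive)

        self.events.append((group, positive))
        self.counts[group] += 1
        self.hits[group] += int(positive)

        rates = [
            self.hits[g] / self.counts[g]
            for g in self.counts
            if self.counts[g] >= self.min_count
        ]
        if len(rates) < 2:
            return None  # need at least two well-sampled groups to compare
        gap = max(rates) - min(rates)
        return gap if gap > self.max_gap else None


# Usage: feed decisions as they are made and act on any non-None result.
monitor = DisparityMonitor(window=100, max_gap=0.2, min_count=10)
alerts = [monitor.observe("A", True) for _ in range(20)]
alerts += [monitor.observe("B", False) for _ in range(20)]
first_alert = next(i for i, gap in enumerate(alerts) if gap is not None)
print(first_alert, alerts[first_alert])  # 29 1.0
```

Running totals are updated incrementally, so each observation costs time proportional to the number of groups rather than the window size. In practice the window length and thresholds would need tuning against the decision volume and the fairness criteria an organization has adopted.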

Furthermore, industry leaders advocate for the establishment of regulatory sandboxes, which provide a controlled environment for experimenting with AI technologies under regulatory supervision. These sandboxes allow developers to test AI systems while receiving feedback from regulators and ethicists, fostering a collaborative atmosphere. They can enable a deep dive into the complex interdependencies of technology, ethics, and societal norms, helping organizations refine their AI frameworks and helping regulators understand the nuances of emerging challenges in AI bias.

The Role of Diverse Perspectives in Shaping AI Policy

A growing number of industry professionals advocate for incorporating varied perspectives into AI policy development to effectively address bias. By engaging experts from diverse fields—such as social sciences, law, technology, and ethics—policymakers can gain a more holistic understanding of the implications of AI systems. These varied viewpoints provide critical insights into the social impact of AI, helping to identify potential blind spots that may be overlooked by technologists alone. Key strategies for enhancing dialogue and collaboration include:

- Facilitating interdisciplinary workshops to bridge knowledge gaps

- Incorporating community feedback in policy refinement processes

- Encouraging the participation of marginalized voices in discussions

Moreover, fostering an inclusive environment can lead to innovative solutions for mitigating AI bias. By establishing partnerships with academia and civil society, industry stakeholders can draw on extensive research and empirical data to inform better policy decisions. Encouraging a culture of openness and vulnerability in discussions around AI governance helps foster mutual respect and shared objectives. As collaborations flourish, the collective wisdom gained from various domains can strengthen AI frameworks, ultimately leading to policies that promote fairness and accountability among stakeholders.